036. Binder Death Notification: A Scenario Analysis


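This analysis is based on android10_r41.

# Basic flow of a death notification

A death notification lets the Bp side (the client process) learn whether the Bn side (the server process) is still alive: when the Bn-side process dies, the Bp side is notified.

Before reading the source, note that a "death notification" is really a callback mechanism:

- The client constructs and stores a "death recipient" (callback) object, then sends it to the driver

- The driver stores the death recipient and records which client and which server it belongs to

- When the server process dies, the process cleanup routine runs and calls into the binder driver's cleanup function. The driver finds the clients that were using the server's service and sends them the death message, and each client then invokes its death callback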
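The three steps above can be sketched as a toy model in plain C++. This is not the Binder driver or libbinder: `ToyDriver`, `linkToDeath`, and `processDied` are hypothetical names that only mimic the register-then-callback shape, under the assumption that a process can be identified by a string.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Toy stand-in for the driver (illustrative only): it remembers which
// callback belongs to which (client, server) pair.
class ToyDriver {
public:
    using DeathCallback = std::function<void(const std::string& deadServer)>;

    // Steps 1 and 2: the client hands its callback to the "driver",
    // which stores it under the server being watched.
    void linkToDeath(const std::string& client, const std::string& server,
                     DeathCallback cb) {
        assert(cb != nullptr);  // a recipient is mandatory
        watchers_[server].push_back({client, std::move(cb)});
    }

    // Step 3: the server's cleanup path runs and every watcher is
    // notified exactly once. Returns the number of clients notified.
    int processDied(const std::string& server) {
        auto it = watchers_.find(server);
        if (it == watchers_.end()) return 0;
        std::vector<Watcher> toNotify = std::move(it->second);
        watchers_.erase(it);
        for (auto& w : toNotify) w.callback(server);
        return static_cast<int>(toNotify.size());
    }

private:
    struct Watcher {
        std::string client;
        DeathCallback callback;
    };
    std::map<std::string, std::vector<Watcher>> watchers_;
};
```

Calling `processDied` a second time for the same server notifies nobody, which loosely mirrors the one-shot nature of the real notification.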

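# Registering a death notification

We use AMS as the example. An application process calls the attachApplication method of AMS, and while handling that call AMS registers a death notification on the application's IApplicationThread. For this particular Binder, AMS is the Bp side and the application process is the Bn side: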

// Interface defined in AIDL

// frameworks/base/core/java/android/app/IActivityManager.aidl

void attachApplication(in IApplicationThread app, long startSeq);

// frameworks/base/services/core/java/com/android/server/am/ActivityManagerService.java

// Server-side implementation of the interface

// This is the server-side (Bn) implementation of a remote procedure call

@Override

public final void attachApplication(IApplicationThread thread, long startSeq) {

if (thread == null) {

throw new SecurityException("Invalid application interface");

}

synchronized (this) {

int callingPid = Binder.getCallingPid();

final int callingUid = Binder.getCallingUid();

final long origId = Binder.clearCallingIdentity();

// The actual work is done in attachApplicationLocked

attachApplicationLocked(thread, callingPid, callingUid, startSeq);

Binder.restoreCallingIdentity(origId);

}

}

// IApplicationThread is an anonymous Binder (a Binder callback) that the client passes to the server; at this point it is a Bp proxy object

// The method actually called is attachApplicationLocked

// frameworks/base/services/core/java/com/android/server/am/ActivityManagerService.java

private boolean attachApplicationLocked(@NonNull IApplicationThread thread,

int pid, int callingUid, long startSeq) {

ProcessRecord app;

//......

try {

// Construct the death recipient (callback) object

AppDeathRecipient adr = new AppDeathRecipient(app, pid, thread);

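// Register a death notification with the kernel for the anonymous Binder service IApplicationThread

// thread.asBinder() returns a BinderProxy object

// linkToDeath is then called on it to register the death notification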

thread.asBinder().linkToDeath(adr, 0);

app.deathRecipient = adr;

} catch (RemoteException e) {

app.resetPackageList(mProcessStats);

mProcessList.startProcessLocked(app,

new HostingRecord("link fail", processName));

return false;

}

//......

}


linkToDeath is a native method:

// frameworks/base/core/java/android/os/BinderProxy.java

public final class BinderProxy implements IBinder {

public native void linkToDeath(DeathRecipient recipient, int flags)

throws RemoteException;

}


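Its first argument is an AppDeathRecipient, a death recipient (callback) object, implemented as shown here. Its core is the binderDied method: when the process hosting the Binder dies, this object's binderDied method is called back. How that call is made is analyzed later: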

private final class AppDeathRecipient implements IBinder.DeathRecipient {

final ProcessRecord mApp;

final int mPid;

final IApplicationThread mAppThread;

AppDeathRecipient(ProcessRecord app, int pid,

IApplicationThread thread) {

if (DEBUG_ALL) Slog.v(

TAG, "New death recipient " + this

+ " for thread " + thread.asBinder());

mApp = app;

mPid = pid;

mAppThread = thread;

}

@Override

public void binderDied() {

if (DEBUG_ALL) Slog.v(

TAG, "Death received in " + this

+ " for thread " + mAppThread.asBinder());

synchronized(ActivityManagerService.this) {

appDiedLocked(mApp, mPid, mAppThread, true);

}

}

}


The JNI function behind linkToDeath is:

// frameworks/base/core/jni/android_util_Binder.cpp

static void android_os_BinderProxy_linkToDeath(JNIEnv* env, jobject obj,

jobject recipient, jint flags) // throws RemoteException

{

if (recipient == NULL) {

jniThrowNullPointerException(env, NULL);

return;

}

// Get the BpBinder object that backs this BinderProxy

BinderProxyNativeData *nd = getBPNativeData(env, obj);

// The actual type of target is BpBinder

IBinder* target = nd->mObject.get();

LOGDEATH("linkToDeath: binder=%p recipient=%p\n", target, recipient);

if (!target->localBinder()) { // this branch is taken

// Get the DeathRecipientList; its mList member records the JavaDeathRecipient objects of this BinderProxy

// The list exists because one BpBinder can have several death recipients registered

DeathRecipientList* list = nd->mOrgue.get();

// Create the JavaDeathRecipient object

sp<JavaDeathRecipient> jdr = new JavaDeathRecipient(env, recipient, list);

// Register the death notification with the driver

status_t err = target->linkToDeath(jdr, NULL, flags);

if (err != NO_ERROR) {

// Failure adding the death recipient, so clear its reference

// now.

jdr->clearReference();

signalExceptionForError(env, obj, err, true /*canThrowRemoteException*/);

}

}

}


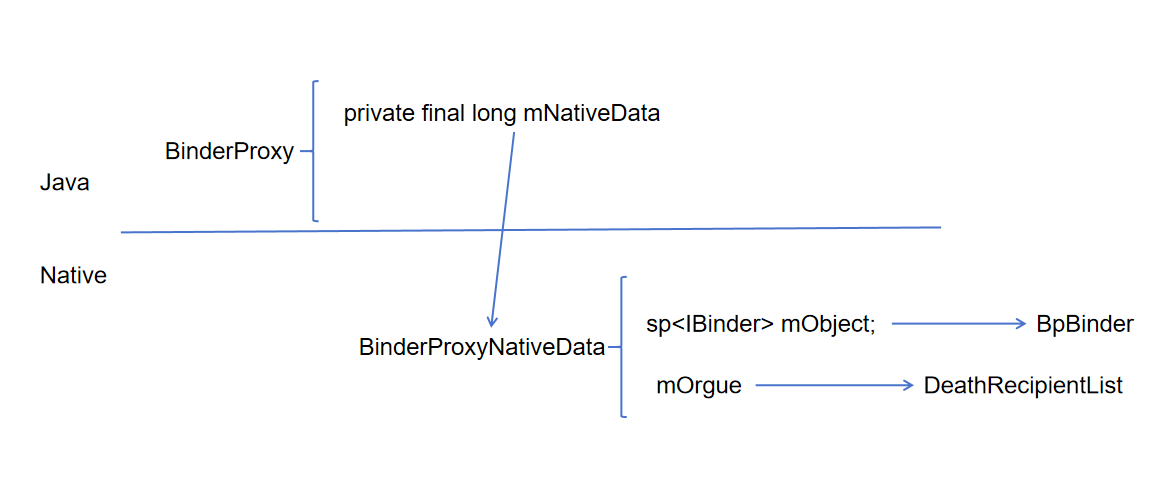

这里使用到了 BinderProxy 的一些内部数据,回顾一下Binder Java 层服务注册过程分析 (opens new window)中介绍的 BinderProxy 的结构:

BinderProxy 类涉及 Java 和 Native 两层:

接着我们再来看看 native 层的回调对象 JavaDeathRecipient:

// frameworks/base/core/jni/android_util_Binder.cpp

// How a JavaDeathRecipient object is initialized

class JavaDeathRecipient : public IBinder::DeathRecipient

{

public:

// object is the AppDeathRecipient, i.e. the Java-layer callback object

JavaDeathRecipient(JNIEnv* env, jobject object, const sp<DeathRecipientList>& list)

: mVM(jnienv_to_javavm(env)), mObject(env->NewGlobalRef(object)), // a global reference to the Java-layer recipient is stored in mObject

mObjectWeak(NULL), mList(list)

{

// These objects manage their own lifetimes so are responsible for final bookkeeping.

// The list holds a strong reference to this object.

LOGDEATH("Adding JDR %p to DRL %p", this, list.get());

// Add this object (as an sp) to the DeathRecipientList

list->add(this);

gNumDeathRefsCreated.fetch_add(1, std::memory_order_relaxed);

gcIfManyNewRefs(env);

}

//......

};


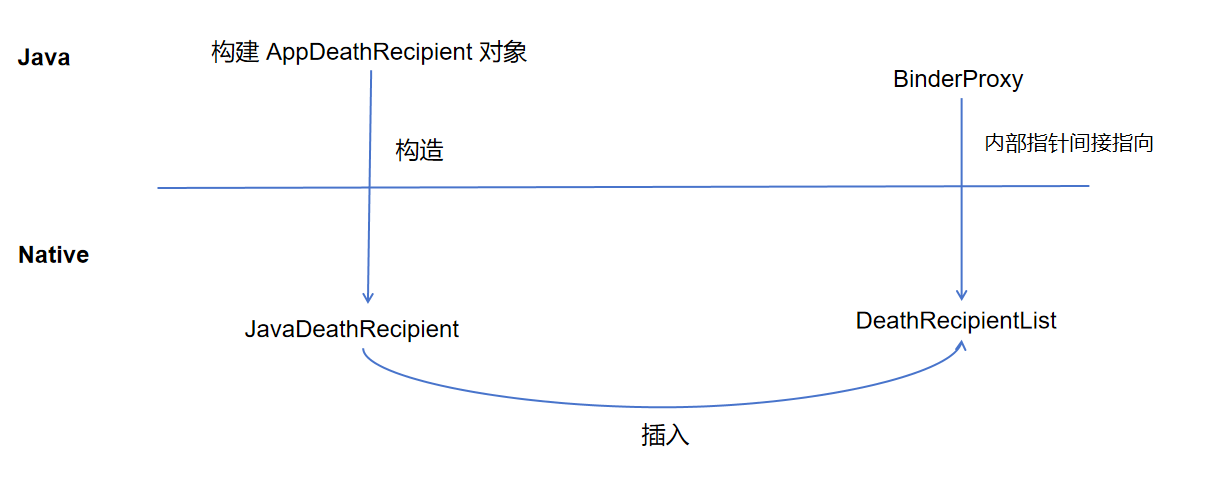

JavaDeathRecipient 构造函数主要就是把死亡回调对象 JavaDeathRecipient 对象自己加入到 DeathRecipientList 中,把 Java 层的 AppDeathRecipient 对象保存到 mObject 成员中,其他都是一些打印或者引用操作。

整个过程如下图所示:

我们接着看 status_t err = target->linkToDeath(jdr, NULL, flags); 的实现:

// Register the death recipient with the driver

// frameworks/native/libs/binder/BpBinder.cpp

status_t BpBinder::linkToDeath(

const sp<DeathRecipient>& recipient, void* cookie, uint32_t flags)

{

// Build an Obituary object that holds the recipient

Obituary ob;

ob.recipient = recipient;

ob.cookie = cookie;

ob.flags = flags;

LOG_ALWAYS_FATAL_IF(recipient == nullptr,

"linkToDeath(): recipient must be non-NULL");

{

AutoMutex _l(mLock);

if (!mObitsSent) { // entered only if sendObituary has not run yet

if (!mObituaries) { // the death notification is requested from the driver only once

mObituaries = new Vector<Obituary>;

if (!mObituaries) {

return NO_MEMORY;

}

ALOGV("Requesting death notification: %p handle %d\n", this, mHandle);

getWeakRefs()->incWeak(this);

IPCThreadState* self = IPCThreadState::self();

// Focus point 1

// Build the command data

self->requestDeathNotification(mHandle, this);

// Focus point 2

// Send the prepared data to the driver

self->flushCommands();

}

ssize_t res = mObituaries->add(ob); // add the new Obituary to the BpBinder's mObituaries member

return res >= (ssize_t)NO_ERROR ? (status_t)NO_ERROR : res;

}

}

return DEAD_OBJECT;

}
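The pattern in BpBinder::linkToDeath (ask the driver once, keep every recipient in a user-space list) can be illustrated with a small self-contained sketch. `ToyProxy`, `ToyRecipient`, `ToyStatus`, and the `driverRequests` counter are hypothetical stand-ins, and `std::weak_ptr` is used here as an assumed analogue of the weak reference held by an Obituary.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

struct ToyRecipient {};  // hypothetical stand-in for IBinder::DeathRecipient

enum class ToyStatus { Ok, DeadObject };

class ToyProxy {
public:
    // Mirrors the shape of linkToDeath: the first registration allocates
    // the list and talks to the "driver"; later ones only append.
    ToyStatus linkToDeath(const std::shared_ptr<ToyRecipient>& recipient) {
        assert(recipient != nullptr);
        if (obitsSent_) return ToyStatus::DeadObject;  // remote already reported dead
        if (!obituaries_) {
            obituaries_ = std::make_unique<std::vector<std::weak_ptr<ToyRecipient>>>();
            ++driverRequests_;  // stands in for BC_REQUEST_DEATH_NOTIFICATION
        }
        obituaries_->push_back(recipient);
        return ToyStatus::Ok;
    }

    void markObituariesSent() { obitsSent_ = true; }
    int driverRequests() const { return driverRequests_; }
    std::size_t recipientCount() const {
        return obituaries_ ? obituaries_->size() : 0;
    }

private:
    bool obitsSent_ = false;
    int driverRequests_ = 0;
    std::unique_ptr<std::vector<std::weak_ptr<ToyRecipient>>> obituaries_;
};
```

Registering two recipients on the same `ToyProxy` bumps `driverRequests()` only once, which is the behavior the `if (!mObituaries)` check produces in the real code.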


Now for focus point 1:

status_t IPCThreadState::requestDeathNotification(int32_t handle, BpBinder* proxy)

{

mOut.writeInt32(BC_REQUEST_DEATH_NOTIFICATION);

mOut.writeInt32((int32_t)handle);

mOut.writePointer((uintptr_t)proxy);

return NO_ERROR;

}


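This writes the data destined for the driver into the Parcel mOut:

- BC_REQUEST_DEATH_NOTIFICATION: the command

- handle: the handle value of the target service

- proxy: a pointer to the client's BpBinder (the code passes this, a BpBinder*, not a BinderProxy); the driver stores it as the cookie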
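To make the layout of that record concrete, here is a minimal sketch of writing the three fields back to back and reading them again with a moving offset, similar in spirit to how binder_thread_write advances ptr. The command value, the 64-bit cookie width, and the names `DeathRequest`, `writeDeathRequest`, and `readDeathRequest` are assumptions for illustration; the real constant comes from the binder UAPI header.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical command value, NOT the real BC_REQUEST_DEATH_NOTIFICATION.
constexpr uint32_t kToyRequestDeathNotification = 0x1001;

struct DeathRequest {
    uint32_t cmd;
    uint32_t handle;
    uint64_t cookie;  // the proxy pointer, treated as an opaque value
};

// Writer side: append command, handle, and cookie in order.
void writeDeathRequest(std::vector<uint8_t>& out, uint32_t handle, uint64_t cookie) {
    auto append = [&out](const void* p, std::size_t n) {
        const uint8_t* bytes = static_cast<const uint8_t*>(p);
        out.insert(out.end(), bytes, bytes + n);
    };
    uint32_t cmd = kToyRequestDeathNotification;
    append(&cmd, sizeof(cmd));
    append(&handle, sizeof(handle));
    append(&cookie, sizeof(cookie));
}

// Reader side: consume the same fields, advancing offset after each one.
DeathRequest readDeathRequest(const std::vector<uint8_t>& in, std::size_t& offset) {
    constexpr std::size_t kRecordSize = 2 * sizeof(uint32_t) + sizeof(uint64_t);
    assert(offset + kRecordSize <= in.size());
    DeathRequest req{};
    auto take = [&in, &offset](void* dst, std::size_t n) {
        std::memcpy(dst, in.data() + offset, n);
        offset += n;
    };
    take(&req.cmd, sizeof(req.cmd));
    take(&req.handle, sizeof(req.handle));
    take(&req.cookie, sizeof(req.cookie));
    return req;
}
```

The reader never interprets the cookie; it only carries the value along, which is also how the driver treats the BpBinder pointer.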

Now for focus point 2:

void IPCThreadState::flushCommands()

{

if (mProcess->mDriverFD <= 0)

return;

talkWithDriver(false);

// The flush could have caused post-write refcount decrements to have

// been executed, which in turn could result in BC_RELEASE/BC_DECREFS

// being queued in mOut. So flush again, if we need to.

if (mOut.dataSize() > 0) {

talkWithDriver(false);

}

if (mOut.dataSize() > 0) {

ALOGW("mOut.dataSize() > 0 after flushCommands()");

}

}


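talkWithDriver sends the data to the driver. It was analyzed in an earlier article, so it is not repeated here. The process eventually traps into the kernel through the ioctl system call, with the following call stack:

ioctl (user space)

-> binder_ioctl (kernel)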

-> binder_ioctl_write_read

-> binder_thread_write


We start the analysis from binder_thread_write:

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

struct binder_context *context = proc->context;

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error.cmd == BR_OK) {

int ret;

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

trace_binder_command(cmd);

if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {

atomic_inc(&binder_stats.bc[_IOC_NR(cmd)]);

atomic_inc(&proc->stats.bc[_IOC_NR(cmd)]);

atomic_inc(&thread->stats.bc[_IOC_NR(cmd)]);

}

switch (cmd) {

// ......

case BC_REQUEST_DEATH_NOTIFICATION:

case BC_CLEAR_DEATH_NOTIFICATION: {

uint32_t target;

binder_uintptr_t cookie;

struct binder_ref *ref;

struct binder_ref_death *death = NULL;

// Read the handle of the target binder reference

if (get_user(target, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

// Read the BpBinder pointer (the cookie)

if (get_user(cookie, (binder_uintptr_t __user *)ptr))

return -EFAULT;

ptr += sizeof(binder_uintptr_t);

if (cmd == BC_REQUEST_DEATH_NOTIFICATION) {

/*

* Allocate memory for death notification

* before taking lock

*/

// Allocate memory for the binder_ref_death

death = kzalloc(sizeof(*death), GFP_KERNEL);

//......

}

binder_proc_lock(proc);

// Look up the binder_ref for the handle target in proc->refs_by_desc (proc is the calling process, the one registering the notification)

ref = binder_get_ref_olocked(proc, target, false);

//......

binder_node_lock(ref->node);

if (cmd == BC_REQUEST_DEATH_NOTIFICATION) {

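if (ref->death) { // a death notification is already registered

// A native Bp can hold several recipients, but the kernel allows only one death notification per reference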

binder_user_error("%d:%d BC_REQUEST_DEATH_NOTIFICATION death notification already set\n",

proc->pid, thread->pid);

binder_node_unlock(ref->node);

binder_proc_unlock(proc);

kfree(death);

break;

}

binder_stats_created(BINDER_STAT_DEATH);

INIT_LIST_HEAD(&death->work.entry);

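death->cookie = cookie; // the BpBinder pointer; the BpBinder holds the death recipients

ref->death = death; // binder_ref_death

if (ref->node->proc == NULL) { // the process hosting the target binder service is already dead, so the death notification is sent to the client right away; this is the unusual case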

ref->death->work.type = BINDER_WORK_DEAD_BINDER;

binder_inner_proc_lock(proc);

// Put the binder_ref_death work item on the todo queue

binder_enqueue_work_ilocked(

&ref->death->work, &proc->todo);

// Wake the process up to handle it

binder_wakeup_proc_ilocked(proc);

binder_inner_proc_unlock(proc);

}

} else {

//......

}

binder_node_unlock(ref->node);

binder_proc_unlock(proc);

} break;

//......

}

return 0;

}


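- Look up the binder_ref for the target service in the calling process's proc->refs_by_desc (in the AMS example the caller is the AMS process, and the reference points at the application's IApplicationThread)

- Build a new binder_ref_death whose cookie holds the BpBinder pointer that was passed in; that BpBinder holds the death recipient objects

- Point the binder_ref's death pointer at the new binder_ref_death

- If the process hosting the target binder service is already dead, send the death notification to the client immediately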
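The registration rules in the list above (one death record per reference, immediate delivery if the owner is already gone) can be modeled in a few lines. This is a user-space sketch, not kernel code: `ToyRef`, `ToyNode`, `ToyClientProc`, and `RegisterResult` are hypothetical types, and locking is left out entirely.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <memory>

struct ToyDeath { uint64_t cookie; };

// ownerAlive == false models node->proc == NULL in the driver.
struct ToyNode { bool ownerAlive = true; };

struct ToyRef {
    ToyNode* node = nullptr;
    std::unique_ptr<ToyDeath> death;  // at most one record per reference
};

// The registering process; todo holds cookies of queued dead-binder work.
struct ToyClientProc { std::deque<uint64_t> todo; };

enum class RegisterResult { Registered, AlreadySet, DeliveredImmediately };

RegisterResult requestDeathNotification(ToyClientProc& proc, ToyRef& ref,
                                        uint64_t cookie) {
    assert(ref.node != nullptr);
    if (ref.death) return RegisterResult::AlreadySet;  // second request is rejected
    ref.death = std::make_unique<ToyDeath>(ToyDeath{cookie});
    if (!ref.node->ownerAlive) {
        // Owner already dead: queue the notification right away.
        proc.todo.push_back(cookie);
        return RegisterResult::DeliveredImmediately;
    }
    return RegisterResult::Registered;
}
```

A rejected second request leaves the first cookie in place, which is why user space has to multiplex several recipients over a single kernel registration.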

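# Triggering a death notification

When the process hosting a Binder service dies, the kernel releases the resources associated with the process. For binder, this ends up calling binder_release():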

static int binder_release(struct inode *nodp, struct file *filp)

{

struct binder_proc *proc = filp->private_data;

debugfs_remove(proc->debugfs_entry);

// Add binder_deferred_work to the work queue

binder_defer_work(proc, BINDER_DEFERRED_RELEASE);

return 0;

}


Next, let's look at the implementation of binder_defer_work:

// Define a work item

static DECLARE_WORK(binder_deferred_work, binder_deferred_func);

static void

binder_defer_work(struct binder_proc *proc, enum binder_deferred_state defer)

{

mutex_lock(&binder_deferred_lock);

// Record BINDER_DEFERRED_RELEASE in proc->deferred_work

proc->deferred_work |= defer;

if (hlist_unhashed(&proc->deferred_work_node)) {

hlist_add_head(&proc->deferred_work_node,

&binder_deferred_list);

// Put our work item on the kernel's shared default workqueue

// The kernel takes work items off the queue in turn and runs them

schedule_work(&binder_deferred_work);

}

mutex_unlock(&binder_deferred_lock);

}


Next, let's look at what the work item actually does:

static void binder_deferred_func(struct work_struct *work)

{

struct binder_proc *proc;

struct files_struct *files;

int defer;

do {

mutex_lock(&binder_deferred_lock);

if (!hlist_empty(&binder_deferred_list)) {

proc = hlist_entry(binder_deferred_list.first,

struct binder_proc, deferred_work_node);

hlist_del_init(&proc->deferred_work_node);

// Take BINDER_DEFERRED_RELEASE out of proc->deferred_work

defer = proc->deferred_work;

proc->deferred_work = 0;

} else {

proc = NULL;

defer = 0;

}

mutex_unlock(&binder_deferred_lock);

files = NULL;

if (defer & BINDER_DEFERRED_PUT_FILES) {

mutex_lock(&proc->files_lock);

files = proc->files;

if (files)

proc->files = NULL;

mutex_unlock(&proc->files_lock);

}

if (defer & BINDER_DEFERRED_FLUSH)

binder_deferred_flush(proc);

if (defer & BINDER_DEFERRED_RELEASE) // this path is taken

binder_deferred_release(proc); /* frees proc */

if (files)

put_files_struct(files);

} while (proc);

}

// Release resources

static void binder_deferred_release(struct binder_proc *proc)

{

struct binder_context *context = proc->context;

struct rb_node *n;

int threads, nodes, incoming_refs, outgoing_refs, active_transactions;

BUG_ON(proc->files);

// Remove the proc_node entry

mutex_lock(&binder_procs_lock);

hlist_del(&proc->proc_node);

mutex_unlock(&binder_procs_lock);

mutex_lock(&context->context_mgr_node_lock);

if (context->binder_context_mgr_node &&

context->binder_context_mgr_node->proc == proc) {

binder_debug(BINDER_DEBUG_DEAD_BINDER,

"%s: %d context_mgr_node gone\n",

__func__, proc->pid);

context->binder_context_mgr_node = NULL;

}

mutex_unlock(&context->context_mgr_node_lock);

binder_inner_proc_lock(proc);

/*

* Make sure proc stays alive after we

* remove all the threads

*/

proc->tmp_ref++;

// Release the binder_thread objects

proc->is_dead = true;

threads = 0;

active_transactions = 0;

while ((n = rb_first(&proc->threads))) {

struct binder_thread *thread;

thread = rb_entry(n, struct binder_thread, rb_node);

binder_inner_proc_unlock(proc);

threads++;

active_transactions += binder_thread_release(proc, thread);

binder_inner_proc_lock(proc);

}

// Release the binder_node objects

nodes = 0;

incoming_refs = 0;

while ((n = rb_first(&proc->nodes))) {

struct binder_node *node;

node = rb_entry(n, struct binder_node, rb_node);

nodes++;

/*

* take a temporary ref on the node before

* calling binder_node_release() which will either

* kfree() the node or call binder_put_node()

*/

binder_inc_node_tmpref_ilocked(node);

rb_erase(&node->rb_node, &proc->nodes);

binder_inner_proc_unlock(proc);

// Key point

incoming_refs = binder_node_release(node, incoming_refs);

binder_inner_proc_lock(proc);

}

binder_inner_proc_unlock(proc);

// Release the binder_ref objects

outgoing_refs = 0;

binder_proc_lock(proc);

while ((n = rb_first(&proc->refs_by_desc))) {

struct binder_ref *ref;

ref = rb_entry(n, struct binder_ref, rb_node_desc);

outgoing_refs++;

binder_cleanup_ref_olocked(ref);

binder_proc_unlock(proc);

binder_free_ref(ref);

binder_proc_lock(proc);

}

binder_proc_unlock(proc);

// Release the binder_work items

binder_release_work(proc, &proc->todo);

binder_release_work(proc, &proc->delivered_death);

binder_debug(BINDER_DEBUG_OPEN_CLOSE,

"%s: %d threads %d, nodes %d (ref %d), refs %d, active transactions %d\n",

__func__, proc->pid, threads, nodes, incoming_refs,

outgoing_refs, active_transactions);

binder_proc_dec_tmpref(proc);

}

static int binder_node_release(struct binder_node *node, int refs)

{

struct binder_ref *ref;

int death = 0;

struct binder_proc *proc = node->proc;

binder_release_work(proc, &node->async_todo);

binder_node_lock(node);

binder_inner_proc_lock(proc);

binder_dequeue_work_ilocked(&node->work);

/*

* The caller must have taken a temporary ref on the node,

*/

BUG_ON(!node->tmp_refs);

if (hlist_empty(&node->refs) && node->tmp_refs == 1) {

binder_inner_proc_unlock(proc);

binder_node_unlock(node);

binder_free_node(node);

return refs;

}

node->proc = NULL;

node->local_strong_refs = 0;

node->local_weak_refs = 0;

binder_inner_proc_unlock(proc);

spin_lock(&binder_dead_nodes_lock);

hlist_add_head(&node->dead_node, &binder_dead_nodes);

spin_unlock(&binder_dead_nodes_lock);

// Walk every binder_ref in node->refs

hlist_for_each_entry(ref, &node->refs, node_entry) {

refs++;

/*

* Need the node lock to synchronize

* with new notification requests and the

* inner lock to synchronize with queued

* death notifications.

*/

binder_inner_proc_lock(ref->proc);

if (!ref->death) {

binder_inner_proc_unlock(ref->proc);

continue;

}

death++;

// Queue a BINDER_WORK_DEAD_BINDER work item on the client process's todo list

BUG_ON(!list_empty(&ref->death->work.entry));

ref->death->work.type = BINDER_WORK_DEAD_BINDER;

binder_enqueue_work_ilocked(&ref->death->work,

&ref->proc->todo);

// Wake up the client

binder_wakeup_proc_ilocked(ref->proc);

binder_inner_proc_unlock(ref->proc);

}

binder_debug(BINDER_DEBUG_DEAD_BINDER,

"node %d now dead, refs %d, death %d\n",

node->debug_id, refs, death);

binder_node_unlock(node);

binder_put_node(node);

return refs;

}
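The loop at the end of binder_node_release fans one death out to every client that asked for it. A minimal sketch of that fan-out, with hypothetical types (`ToyProc`, `ToyNodeRef`, `ReleaseStats`) and no locking, could look like this:

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <vector>

// A client process: todo holds cookies of queued dead-binder work.
struct ToyProc {
    std::deque<uint64_t> todo;
    bool woken = false;
};

// One reference to the dying node, held by some client process.
struct ToyNodeRef {
    ToyProc* proc;    // the client holding this reference
    bool hasDeath;    // models ref->death != NULL
    uint64_t cookie;  // only meaningful when hasDeath is true
};

struct ReleaseStats {
    int refs = 0;    // references seen
    int deaths = 0;  // notifications queued
};

// Walk all references to the node; only those that registered a death
// notification get work queued and their process woken.
ReleaseStats releaseNode(const std::vector<ToyNodeRef>& nodeRefs) {
    ReleaseStats stats;
    for (const ToyNodeRef& ref : nodeRefs) {
        assert(ref.proc != nullptr);
        ++stats.refs;
        if (!ref.hasDeath) continue;
        ++stats.deaths;
        ref.proc->todo.push_back(ref.cookie);
        ref.proc->woken = true;
    }
    return stats;
}
```

The two counters correspond to the refs and death values that the driver prints in its "node now dead" debug message.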


Next, the client is woken up:

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

retry:

binder_inner_proc_lock(proc);

wait_for_proc_work = binder_available_for_proc_work_ilocked(thread);

binder_inner_proc_unlock(proc);

thread->looper |= BINDER_LOOPER_STATE_WAITING;

trace_binder_wait_for_work(wait_for_proc_work,

!!thread->transaction_stack,

!binder_worklist_empty(proc, &thread->todo));

if (wait_for_proc_work) {

if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |

BINDER_LOOPER_STATE_ENTERED))) {

binder_user_error("%d:%d ERROR: Thread waiting for process work before calling BC_REGISTER_LOOPER or BC_ENTER_LOOPER (state %x)\n",

proc->pid, thread->pid, thread->looper);

wait_event_interruptible(binder_user_error_wait,

binder_stop_on_user_error < 2);

}

binder_restore_priority(current, proc->default_priority);

}

// The binder thread sleeps here waiting for work; once woken up, it continues from this point

if (non_block) {

if (!binder_has_work(thread, wait_for_proc_work))

ret = -EAGAIN;

} else {

ret = binder_wait_for_work(thread, wait_for_proc_work);

}

thread->looper &= ~BINDER_LOOPER_STATE_WAITING;

if (ret)

return ret;

while (1) {

uint32_t cmd;

struct binder_transaction_data_secctx tr;

struct binder_transaction_data *trd = &tr.transaction_data;

struct binder_work *w = NULL;

struct list_head *list = NULL;

struct binder_transaction *t = NULL;

struct binder_thread *t_from;

size_t trsize = sizeof(*trd);

binder_inner_proc_lock(proc);

if (!binder_worklist_empty_ilocked(&thread->todo))

list = &thread->todo;

else if (!binder_worklist_empty_ilocked(&proc->todo) &&

wait_for_proc_work)

list = &proc->todo;

else {

binder_inner_proc_unlock(proc);

/* no data added */

if (ptr - buffer == 4 && !thread->looper_need_return)

goto retry;

break;

}

if (end - ptr < sizeof(tr) + 4) {

binder_inner_proc_unlock(proc);

break;

}

// Take a binder_work off the queue

w = binder_dequeue_work_head_ilocked(list);

if (binder_worklist_empty_ilocked(&thread->todo))

thread->process_todo = false;

switch (w->type) {

//......

case BINDER_WORK_DEAD_BINDER:

case BINDER_WORK_DEAD_BINDER_AND_CLEAR:

case BINDER_WORK_CLEAR_DEATH_NOTIFICATION: {

struct binder_ref_death *death;

uint32_t cmd;

binder_uintptr_t cookie;

death = container_of(w, struct binder_ref_death, work);

if (w->type == BINDER_WORK_CLEAR_DEATH_NOTIFICATION)

cmd = BR_CLEAR_DEATH_NOTIFICATION_DONE;

else // this branch is taken

cmd = BR_DEAD_BINDER;

cookie = death->cookie; // the cookie is the BpBinder pointer passed in earlier

binder_debug(BINDER_DEBUG_DEATH_NOTIFICATION,

"%d:%d %s %016llx\n",

proc->pid, thread->pid,

cmd == BR_DEAD_BINDER ?

"BR_DEAD_BINDER" :

"BR_CLEAR_DEATH_NOTIFICATION_DONE",

(u64)cookie);

if (w->type == BINDER_WORK_CLEAR_DEATH_NOTIFICATION) {

binder_inner_proc_unlock(proc);

kfree(death);

binder_stats_deleted(BINDER_STAT_DEATH);

} else { // this branch is taken

// Move the binder_work to the delivered_death queue

binder_enqueue_work_ilocked(

w, &proc->delivered_death);

binder_inner_proc_unlock(proc);

}

// Return the BR_DEAD_BINDER command to user space

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

// Return the BpBinder pointer to user space

if (put_user(cookie,

(binder_uintptr_t __user *)ptr))

return -EFAULT;

ptr += sizeof(binder_uintptr_t);

binder_stat_br(proc, thread, cmd);

if (cmd == BR_DEAD_BINDER)

goto done; /* DEAD_BINDER notifications can cause transactions */

} break;

}

//......

return 0;

}


User space then handles the command returned by the kernel:

status_t IPCThreadState::executeCommand(int32_t cmd)

{

BBinder* obj;

RefBase::weakref_type* refs;

status_t result = NO_ERROR;

switch ((uint32_t)cmd) {

case BR_DEAD_BINDER:

{

BpBinder *proxy = (BpBinder*)mIn.readPointer();

proxy->sendObituary();

mOut.writeInt32(BC_DEAD_BINDER_DONE);

mOut.writePointer((uintptr_t)proxy);

} break;

...

}

...

return result;

}

void BpBinder::sendObituary()

{

ALOGV("Sending obituary for proxy %p handle %d, mObitsSent=%s\n",

this, mHandle, mObitsSent ? "true" : "false");

mAlive = 0;

if (mObitsSent) return;

mLock.lock();

Vector<Obituary>* obits = mObituaries;

if(obits != nullptr) {

ALOGV("Clearing sent death notification: %p handle %d\n", this, mHandle);

IPCThreadState* self = IPCThreadState::self();

// Tell the kernel to clear the death notification

self->clearDeathNotification(mHandle, this);

self->flushCommands();

mObituaries = nullptr;

}

mObitsSent = 1;

mLock.unlock();

ALOGV("Reporting death of proxy %p for %zu recipients\n",

this, obits ? obits->size() : 0U);

if (obits != nullptr) {

const size_t N = obits->size();

for (size_t i=0; i<N; i++) {

// Deliver the death notification

reportOneDeath(obits->itemAt(i));

}

delete obits;

}

}

void BpBinder::reportOneDeath(const Obituary& obit)

{

sp<DeathRecipient> recipient = obit.recipient.promote();

ALOGV("Reporting death to recipient: %p\n", recipient.get());

if (recipient == nullptr) return;

// Invoke the death notification callback

recipient->binderDied(this);

}
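The delivery logic in sendObituary and reportOneDeath (deliver at most once, detach the list before calling out, skip recipients that no longer exist) can be sketched as follows. `ToyProxy` and `ToyRecipient` are hypothetical, and `std::weak_ptr::lock()` is used as an assumed analogue of `wp<>::promote()`.

```cpp
#include <cassert>
#include <memory>
#include <vector>

struct ToyRecipient {
    int calls = 0;
    void binderDied() { ++calls; }
};

class ToyProxy {
public:
    void link(const std::shared_ptr<ToyRecipient>& r) {
        assert(r != nullptr);
        recipients_.push_back(r);  // stored weakly, like an Obituary
    }

    // Returns how many recipients were actually called.
    int sendObituary() {
        if (obitsSent_) return 0;  // deliver at most once
        obitsSent_ = true;
        std::vector<std::weak_ptr<ToyRecipient>> obits;
        obits.swap(recipients_);   // detach the list before calling out
        int delivered = 0;
        for (auto& weak : obits) {
            if (auto strong = weak.lock()) {  // recipient may already be gone
                strong->binderDied();
                ++delivered;
            }
        }
        return delivered;
    }

private:
    bool obitsSent_ = false;
    std::vector<std::weak_ptr<ToyRecipient>> recipients_;
};
```

Holding recipients weakly means a registration never keeps a callback object alive on its own; a recipient that was destroyed before the remote side died is silently skipped.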

