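Source versions used in this article:

- AOSP branch: android-10.0.0_r41

- Kernel branch: android-goldfish-4.14-gchips

This article uses the application-layer sample program introduced in Binder 程序示例之 C 语言篇 (Binder Sample Programs: C Edition) as the scenario for analyzing the driver implementation.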

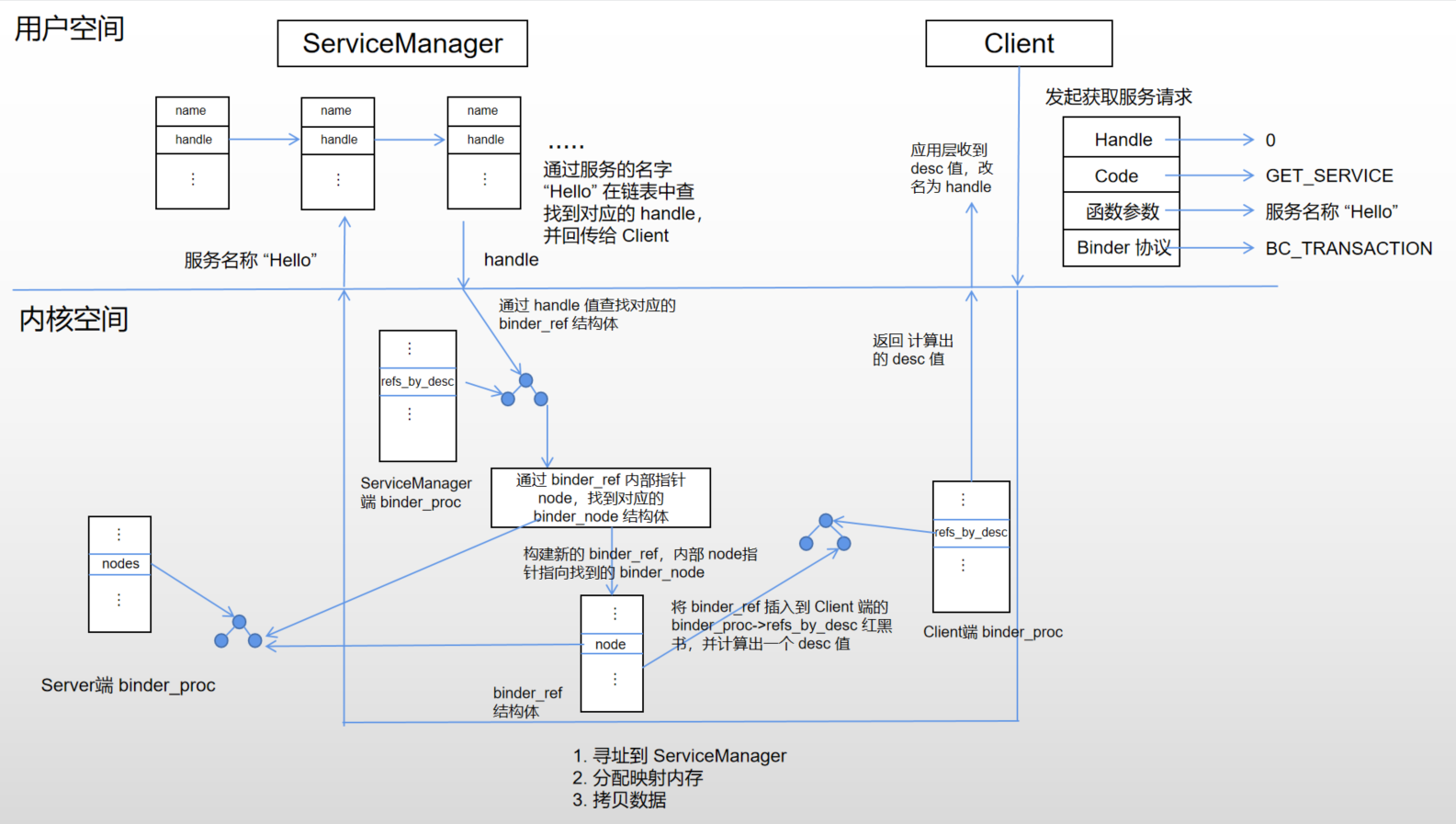

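The service is obtained and used in two steps:

- The client calls svcmgr_lookup to obtain the handle of the hello service from ServiceManager.

- The client builds the binder_io data and calls binder_call to issue a remote call to the Server.

The overall flow is shown in the figure: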

# 1. How the Client obtains the service

binder_client.c:

int main(int argc, char **argv)

{

//......

//Get the handle of the hello service

g_handle = svcmgr_lookup(bs, svcmgr, "hello");

if (!g_handle) {

return -1;

}

//......

}

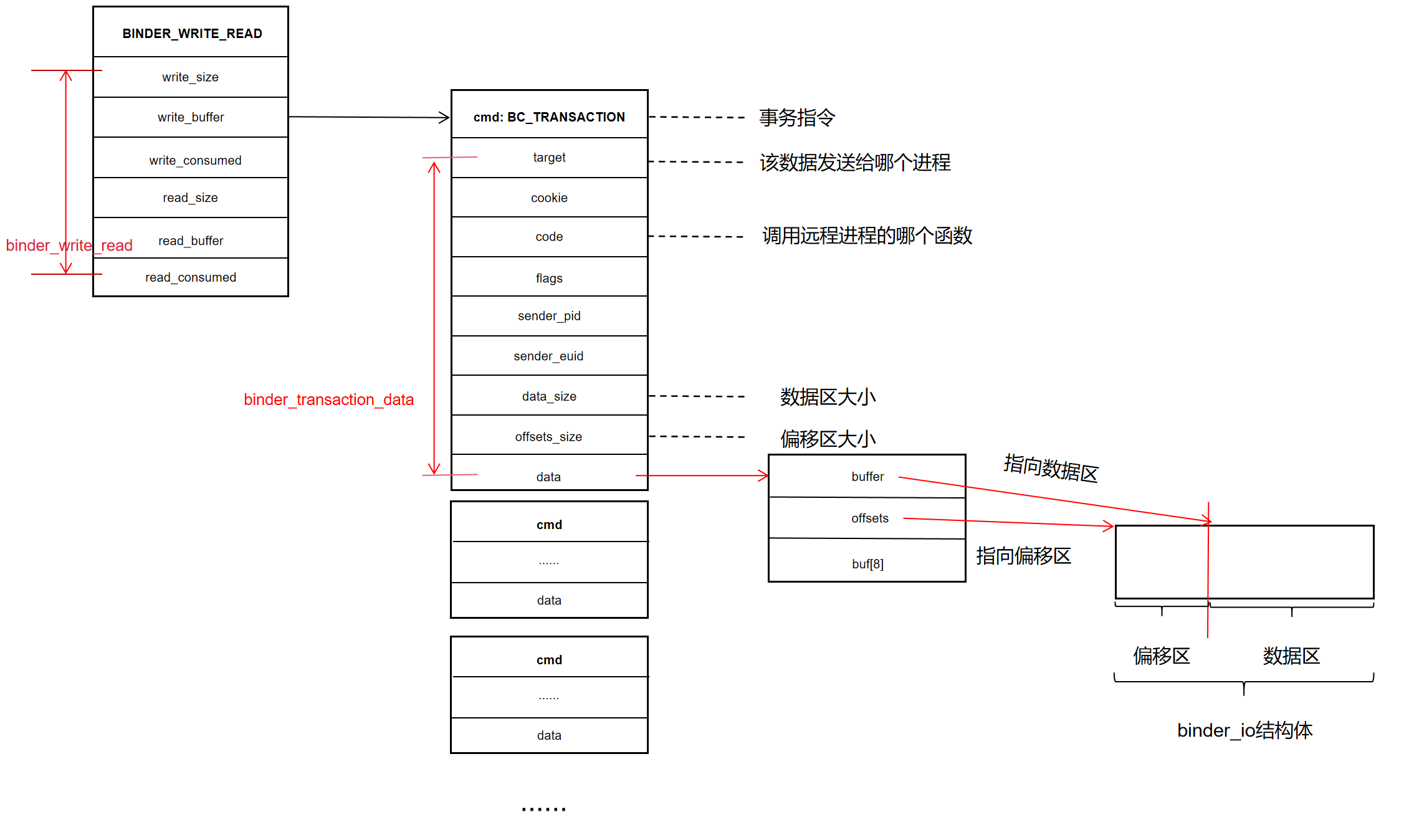

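svcmgr_lookup issues a remote call to look up a service's handle. The handle is the index under which the kernel tracks the service; given a handle, the driver can locate the target process that hosts the service.

svcmgr_lookup takes three parameters:

- struct binder_state *bs: the binder state returned by binder_open

- target: the handle of the target process; the handle of ServiceManager is fixed at 0

- name: the name of the service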
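To make the request format concrete, here is a minimal sketch of the payload that svcmgr_lookup assembles: a 32-bit strict mode header followed by two length-prefixed 16-bit strings. The names mini_io, mini_alloc, mini_put_uint32, mini_put_string16 and build_lookup_request are hypothetical stand-ins for the sample's binder_io helpers. The 4-byte rounding and the trailing 0 terminator are assumptions about how those helpers behave, not a copy of them.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical mini-buffer; the real struct binder_io has more fields. */
struct mini_io {
    uint8_t buf[128];
    size_t  used;
};

/* Reserve size bytes, rounded up to a multiple of 4. NULL on overflow. */
static void *mini_alloc(struct mini_io *io, size_t size)
{
    size = (size + 3) & ~(size_t)3;
    if (size > sizeof(io->buf) - io->used)
        return NULL;
    void *p = io->buf + io->used;
    io->used += size;
    return p;
}

static void mini_put_uint32(struct mini_io *io, uint32_t v)
{
    void *p = mini_alloc(io, sizeof(v));
    if (p)
        memcpy(p, &v, sizeof(v));
}

/* Length prefix, then each character widened to 16 bits, then a 0 terminator. */
static void mini_put_string16(struct mini_io *io, const char *s)
{
    size_t len = strlen(s);
    mini_put_uint32(io, (uint32_t)len);
    uint8_t *p = mini_alloc(io, (len + 1) * sizeof(uint16_t));
    if (!p)
        return;
    for (size_t i = 0; i <= len; i++) {
        uint16_t c = (uint16_t)(unsigned char)s[i];
        memcpy(p + i * sizeof(c), &c, sizeof(c));
    }
}

/* Writes the same three fields as svcmgr_lookup and returns the payload size. */
static size_t build_lookup_request(struct mini_io *io, const char *name)
{
    memset(io, 0, sizeof(*io));
    mini_put_uint32(io, 0);                               /* strict mode header */
    mini_put_string16(io, "android.os.IServiceManager");  /* interface name */
    mini_put_string16(io, name);                          /* service name */
    return io->used;
}
```

Under these assumptions a lookup of "hello" produces an 80-byte payload: 4 bytes for the header, 4 + 56 for the interface name, and 4 + 12 for the service name.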

/**

* Returns the kernel handle of the service with the given name

* target: handle of the target service

* name: name of the service

*/

uint32_t svcmgr_lookup(struct binder_state *bs, uint32_t target, const char *name)

{

uint32_t handle;

unsigned iodata[512/4];

struct binder_io msg, reply;

bio_init(&msg, iodata, sizeof(iodata), 4);

//First field: 0

bio_put_uint32(&msg, 0); // strict mode header

//"android.os.IServiceManager"

bio_put_string16_x(&msg, SVC_MGR_NAME);

//Service name: "hello"

bio_put_string16_x(&msg, name);

//Issue the remote procedure call

//The method invoked is SVC_MGR_CHECK_SERVICE

//The client sleeps until the reply arrives

if (binder_call(bs, &msg, &reply, target, SVC_MGR_CHECK_SERVICE))

return 0;

//......

}

int binder_call(struct binder_state *bs,

struct binder_io *msg, struct binder_io *reply,

uint32_t target, uint32_t code)

{

int res;

struct binder_write_read bwr;

struct {

uint32_t cmd;

struct binder_transaction_data txn;

} __attribute__((packed)) writebuf;

unsigned readbuf[32];

if (msg->flags & BIO_F_OVERFLOW) {

fprintf(stderr,"binder: txn buffer overflow\n");

goto fail;

}

writebuf.cmd = BC_TRANSACTION;

//Note: target is 0 here, which addresses ServiceManager

writebuf.txn.target.handle = target;

writebuf.txn.code = code;

writebuf.txn.flags = 0;

writebuf.txn.data_size = msg->data - msg->data0;

writebuf.txn.offsets_size = ((char*) msg->offs) - ((char*) msg->offs0);

writebuf.txn.data.ptr.buffer = (uintptr_t)msg->data0;

writebuf.txn.data.ptr.offsets = (uintptr_t)msg->offs0;

bwr.write_size = sizeof(writebuf);

bwr.write_consumed = 0;

bwr.write_buffer = (uintptr_t) &writebuf;

hexdump(msg->data0, msg->data - msg->data0);

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder: ioctl failed (%s)\n", strerror(errno));

goto fail;

}

res = binder_parse(bs, reply, (uintptr_t) readbuf, bwr.read_consumed, 0);

if (res == 0) {

return 0;

}

if (res < 0) {

goto fail;

}

}

fail:

memset(reply, 0, sizeof(*reply));

reply->flags |= BIO_F_IOERROR;

return -1;

}
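The packed attribute on writebuf matters because the driver reads the 32-bit command and then expects the transaction payload to start immediately after it. The sketch below illustrates this with a hypothetical mock_txn type that only mimics the 8-byte alignment of the real payload; packed_cmd, padded_cmd and payload_follows_cmd are illustrative names, not part of the sample. It relies on the GCC/Clang `__attribute__((packed))` extension that the sample itself uses.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for struct binder_transaction_data: only the
 * 8-byte alignment of its first member matters for this illustration. */
struct mock_txn {
    uint64_t target;
    uint32_t code;
    uint32_t flags;
};

/* Same shape as writebuf in binder_call: command word, then payload. */
struct packed_cmd {
    uint32_t        cmd;
    struct mock_txn txn;
} __attribute__((packed));

/* Without the attribute the compiler may insert padding after cmd. */
struct padded_cmd {
    uint32_t        cmd;
    struct mock_txn txn;
};

/* A reader of the write buffer consumes 4 bytes of cmd and expects the
 * payload right behind them; returns 1 if a layout satisfies that. */
static int payload_follows_cmd(size_t txn_offset)
{
    return txn_offset == sizeof(uint32_t);
}
```

With the packed layout, sizeof(writebuf) is exactly the command size plus the payload size, which is the value binder_call stores in bwr.write_size.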

As in the previous article, the program now traps into the kernel along the following call path:

ioctl(bs->fd, BINDER_WRITE_READ, &bwr)

-> vfs

-> binder_ioctl

-> binder_ioctl_write_read

-> binder_thread_write

-> binder_transaction

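The kernel side mainly does the following:

- Allocates and maps memory

- Copies and processes the data

- Wakes up the target process

This part is the same as in service registration; the only difference is which remote method is called. See section 3 of Binder 驱动情景分析之服务注册过程 (Binder Driver Scenario Analysis: Service Registration) for the details, which are not repeated here.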

Once the kernel side finishes, ServiceManager is woken up and returns to user space along the path below, where it parses the data it received:

binder_thread_read

-> binder_ioctl_write_read

-> binder_ioctl

-> ioctl (back in user space)

-> binder_loop

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

uint32_t readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(uint32_t));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

//Point of interest 1

//Woken up here, with the received data described by bwr

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

//Parse the data

res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);

if (res == 0) {

ALOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}

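ServiceManager wakes up at point of interest 1 and then:

- Parses the received data

- Invokes the callback function

- Calls the requested remote function according to the received data

- Returns the result

Let us review these steps.

binder_parse parses the received data: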

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

int r = 1;

uintptr_t end = ptr + (uintptr_t) size;

//The buffer may hold several commands; the while loop exits only after all of them are parsed

while (ptr < end) {

//Read the command; each cmd triggers a different action

uint32_t cmd = *(uint32_t *) ptr;

ptr += sizeof(uint32_t);

switch(cmd) {

//......

//This branch is taken

case BR_TRANSACTION_SEC_CTX:

case BR_TRANSACTION: {

struct binder_transaction_data_secctx txn;

if (cmd == BR_TRANSACTION_SEC_CTX) {

//......

} else /* BR_TRANSACTION */ { //execution takes this path

if ((end - ptr) < sizeof(struct binder_transaction_data)) {

ALOGE("parse: txn too small (binder_transaction_data)!\n");

return -1;

}

//Extract the binder_transaction_data struct

memcpy(&txn.transaction_data, (void*) ptr, sizeof(struct binder_transaction_data));

ptr += sizeof(struct binder_transaction_data);

txn.secctx = 0;

}

//......

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

bio_init(&reply, rdata, sizeof(rdata), 4);

//Parse the data further

//Build the binder_io struct from the transaction

bio_init_from_txn(&msg, &txn.transaction_data);

//Invoke the callback function

res = func(bs, &txn, &msg, &reply);

//Send the reply

if (txn.transaction_data.flags & TF_ONE_WAY) {

binder_free_buffer(bs, txn.transaction_data.data.ptr.buffer);

} else {

binder_send_reply(bs, &reply, txn.transaction_data.data.ptr.buffer, res);

}

}

break;

}

//......

default:

ALOGE("parse: OOPS %d\n", cmd);

return -1;

}

}

return r;

}
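The loop structure of binder_parse can be tried out in isolation. The following is a minimal sketch, assuming made-up command codes (MOCK_NOOP, MOCK_TRANSACTION, MOCK_REPLY) and a made-up fixed-size payload (mock_payload); the real BR_* values and payload types come from the binder UAPI header. It keeps the same return convention: 0 once a reply was seen, 1 if none was seen, -1 on malformed input.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command codes and payload, for illustration only. */
enum { MOCK_NOOP = 1, MOCK_TRANSACTION = 2, MOCK_REPLY = 3 };
struct mock_payload { uint32_t code; uint32_t data; };

/* Walks a buffer of [cmd][optional payload] records.
 * *handled counts the transactions that would have been dispatched. */
static int mock_parse(const uint8_t *ptr, size_t size, int *handled)
{
    const uint8_t *end = ptr + size;
    int r = 1;

    assert(handled != NULL);
    while (ptr < end) {
        uint32_t cmd;
        if ((size_t)(end - ptr) < sizeof(cmd))
            return -1;                       /* truncated command word */
        memcpy(&cmd, ptr, sizeof(cmd));
        ptr += sizeof(cmd);

        switch (cmd) {
        case MOCK_NOOP:
            break;                           /* no payload */
        case MOCK_TRANSACTION:
        case MOCK_REPLY:
            if ((size_t)(end - ptr) < sizeof(struct mock_payload))
                return -1;                   /* truncated payload */
            if (cmd == MOCK_TRANSACTION)
                (*handled)++;                /* a server would call func here */
            else
                r = 0;                       /* a client got its reply */
            ptr += sizeof(struct mock_payload);
            break;
        default:
            return -1;                       /* unknown command */
        }
    }
    return r;
}
```

This return convention is why binder_loop treats 0 as an error ("unexpected reply") while binder_call treats 0 as success.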

When the code above reaches res = func(bs, &txn, &msg, &reply);, it runs svcmgr_handler, the callback that was passed to binder_loop:

//Unrelated code has been trimmed

int svcmgr_handler(struct binder_state *bs,

struct binder_transaction_data_secctx *txn_secctx,

struct binder_io *msg,

struct binder_io *reply)

{

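//...... code omitted (including the declaration of txn, which points at txn_secctx->transaction_data)

//Call a different method depending on code

//In this scenario code == SVC_MGR_CHECK_SERVICE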

switch(txn->code) {

case SVC_MGR_GET_SERVICE:

case SVC_MGR_CHECK_SERVICE:

//s is the service name, hello

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

//Look the service up in the svclist linked list and return its handle

handle = do_find_service(s, len, txn->sender_euid, txn->sender_pid,

(const char*) txn_secctx->secctx);

if (!handle)

break;

//Write the handle into reply

bio_put_ref(reply, handle);

return 0;

//......

default:

ALOGE("unknown code %d\n", txn->code);

return -1;

}

bio_put_uint32(reply, 0);

return 0;

}
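The lookup inside svcmgr_handler boils down to a linear search through the list of registered services. Here is a minimal sketch under simplifying assumptions: mock_svc and mock_find_service are hypothetical, names are plain C strings instead of 16-bit strings, and any access checks done by the real do_find_service are left out.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical, simplified registration record. */
struct mock_svc {
    struct mock_svc *next;
    uint32_t         handle;
    const char      *name;
};

/* Linear search through the registration list. A return value of 0 means
 * "not found", which works because 0 is reserved for ServiceManager. */
static uint32_t mock_find_service(const struct mock_svc *list, const char *name)
{
    assert(name != NULL);
    for (const struct mock_svc *si = list; si; si = si->next) {
        if (strcmp(si->name, name) == 0)
            return si->handle;
    }
    return 0;
}
```

Note that the handle stored in the list is only meaningful inside ServiceManager's own process; the driver rewrites it before the client sees it.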

Note that the handle is written with bio_put_ref:

void bio_put_ref(struct binder_io *bio, uint32_t handle)

{

struct flat_binder_object *obj;

if (handle)

obj = bio_alloc_obj(bio);

else

obj = bio_alloc(bio, sizeof(*obj));

if (!obj)

return;

obj->flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;

obj->hdr.type = BINDER_TYPE_HANDLE;

obj->handle = handle;

obj->cookie = 0;

}

This places a flat_binder_object struct in the data, which the kernel processes once the reply enters the driver.
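The kernel can only find that object because its position is recorded separately from the payload bytes. The sketch below models this idea with hypothetical types (mock_obj, mock_bio) and helpers (mock_put_uint32, mock_put_ref); the field names only loosely follow the sample's binder_io and are not the real definitions.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for flat_binder_object. */
struct mock_obj { uint32_t type; uint32_t flags; uint64_t handle; uint64_t cookie; };

/* Hypothetical stand-in for binder_io: payload bytes plus object offsets. */
struct mock_bio {
    uint8_t  data[64];
    size_t   data_used;
    uint64_t offs[4];
    size_t   offs_used;
};

static void mock_put_uint32(struct mock_bio *bio, uint32_t v)
{
    assert(bio->data_used + sizeof(v) <= sizeof(bio->data));
    memcpy(bio->data + bio->data_used, &v, sizeof(v));
    bio->data_used += sizeof(v);
}

/* Appends an object and records where it starts, so that a reader who
 * sees only raw bytes can still locate every embedded object. */
static void mock_put_ref(struct mock_bio *bio, uint32_t handle)
{
    struct mock_obj obj = { .type = 1 /* stands for "handle" */, .handle = handle };

    assert(bio->data_used + sizeof(obj) <= sizeof(bio->data));
    assert(bio->offs_used < sizeof(bio->offs) / sizeof(bio->offs[0]));
    bio->offs[bio->offs_used++] = bio->data_used;
    memcpy(bio->data + bio->data_used, &obj, sizeof(obj));
    bio->data_used += sizeof(obj);
}
```

In the transaction, the two regions correspond to data.ptr.buffer with data_size and data.ptr.offsets with offsets_size.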

Next, binder_send_reply is called to return reply to the Client:

void binder_send_reply(struct binder_state *bs,

struct binder_io *reply,

binder_uintptr_t buffer_to_free,

int status)

{

//Two commands are packed back to back

struct {

uint32_t cmd_free;

binder_uintptr_t buffer;

uint32_t cmd_reply;

struct binder_transaction_data txn;

} __attribute__((packed)) data;

//The first command tells the driver to free the transaction buffer

data.cmd_free = BC_FREE_BUFFER;

data.buffer = buffer_to_free;

//The second command sends the reply data to the client

//The sender issues BC_REPLY; the receiver sees BR_REPLY

data.cmd_reply = BC_REPLY;

data.txn.target.ptr = 0;

data.txn.cookie = 0;

data.txn.code = 0;

if (status) {

data.txn.flags = TF_STATUS_CODE;

data.txn.data_size = sizeof(int);

data.txn.offsets_size = 0;

data.txn.data.ptr.buffer = (uintptr_t)&status;

data.txn.data.ptr.offsets = 0;

} else { //svcmgr_handler returned 0, so this path is taken

data.txn.flags = 0;

data.txn.data_size = reply->data - reply->data0;

data.txn.offsets_size = ((char*) reply->offs) - ((char*) reply->offs0);

data.txn.data.ptr.buffer = (uintptr_t)reply->data0;

data.txn.data.ptr.offsets = (uintptr_t)reply->offs0;

}

//Issue the write

binder_write(bs, &data, sizeof(data));

}

Execution traps into the kernel again, with the following call stack:

ioctl(bs->fd, BINDER_WRITE_READ, &bwr)

-> vfs

-> binder_ioctl

-> binder_ioctl_write_read

-> binder_thread_write

-> binder_transaction

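The kernel-side flow matches service registration; see section 5 of Binder 驱动情景分析之服务注册过程 for the details. Here we look only at the main difference, which is how the handle is processed:

//Start the data transfer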

binder_transaction(proc, thread, &tr,cmd == BC_REPLY, 0);

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply,

binder_size_t extra_buffers_size)

{

//......

for (buffer_offset = off_start_offset; buffer_offset < off_end_offset;

buffer_offset += sizeof(binder_size_t)) { //advance one offset entry at a time: 8 bytes (64 bits) on a 64-bit kernel

struct binder_object_header *hdr;

size_t object_size;

struct binder_object object;

binder_size_t object_offset;

binder_alloc_copy_from_buffer(&target_proc->alloc,

&object_offset,

t->buffer,

buffer_offset,

sizeof(object_offset));

object_size = binder_get_object(target_proc, t->buffer,

object_offset, &object);

if (object_size == 0 || object_offset < off_min) {

binder_user_error("%d:%d got transaction with invalid offset (%lld, min %lld max %lld) or object.\n",

proc->pid, thread->pid,

(u64)object_offset,

(u64)off_min,

(u64)t->buffer->data_size);

return_error = BR_FAILED_REPLY;

return_error_param = -EINVAL;

return_error_line = __LINE__;

goto err_bad_offset;

}

hdr = &object.hdr;

off_min = object_offset + object_size;

switch (hdr->type) {

case BINDER_TYPE_HANDLE: //this branch is taken

case BINDER_TYPE_WEAK_HANDLE: {

struct flat_binder_object *fp;

fp = to_flat_binder_object(hdr);

ret = binder_translate_handle(fp, t, thread);

if (ret < 0) {

return_error = BR_FAILED_REPLY;

return_error_param = ret;

return_error_line = __LINE__;

goto err_translate_failed;

}

binder_alloc_copy_to_buffer(&target_proc->alloc,

t->buffer, object_offset,

fp, sizeof(*fp));

} break;

//......

default:

binder_user_error("%d:%d got transaction with invalid object type, %x\n",

proc->pid, thread->pid, hdr->type);

return_error = BR_FAILED_REPLY;

return_error_param = -EINVAL;

return_error_line = __LINE__;

goto err_bad_object_type;

}

}

//......

}

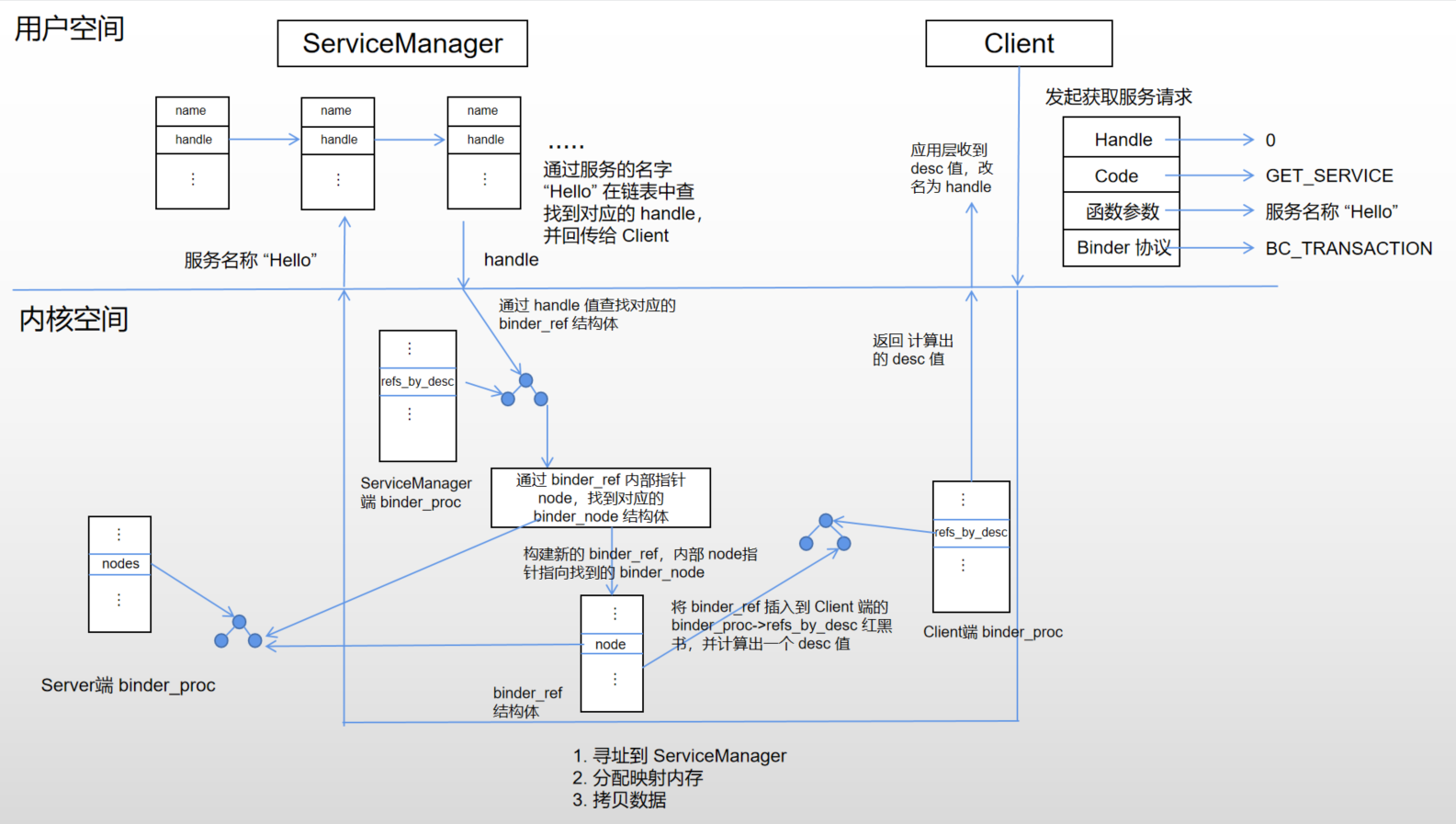

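Here the driver processes the object built by bio_put_ref:

- Finds the binder_node that the handle refers to

- Creates a new binder_ref struct and inserts it into the refs_by_desc red-black tree of target_proc (unless target_proc already holds a reference to that node)

- Computes a new desc value

- Stores the new desc value in the data that is returned to user space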
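The steps above amount to translating a handle that is valid in one process into a handle that is valid in another. The following sketch models that with a deliberately simplified, hypothetical data structure: each mock_proc owns a small array mapping local handles to node ids, where the driver uses red-black trees of binder_ref structs. The names mock_proc, mock_ref_for_node and mock_translate_handle are illustrative only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_REFS 8

/* Hypothetical model: index = local handle, value = node id (0 = empty). */
struct mock_proc {
    int refs[MAX_REFS];
};

/* Returns the handle under which proc already knows node, or creates one
 * in the lowest free slot starting at 1 (slot 0 is reserved). -1 if full. */
static int mock_ref_for_node(struct mock_proc *proc, int node)
{
    int free_slot = -1;

    assert(node != 0);
    for (int h = 1; h < MAX_REFS; h++) {
        if (proc->refs[h] == node)
            return h;                        /* reuse the existing reference */
        if (proc->refs[h] == 0 && free_slot < 0)
            free_slot = h;                   /* remember the first gap */
    }
    if (free_slot >= 0)
        proc->refs[free_slot] = node;
    return free_slot;
}

/* Rewrites a handle valid in src into one valid in dst, or -1 on error. */
static int mock_translate_handle(const struct mock_proc *src,
                                 struct mock_proc *dst, int handle)
{
    if (handle <= 0 || handle >= MAX_REFS || src->refs[handle] == 0)
        return -1;                           /* sender holds no such handle */
    return mock_ref_for_node(dst, src->refs[handle]);
}
```

In this model a service that ServiceManager knows as handle 3 can reach a fresh client as handle 1, which illustrates that handle values are per-process and not global identifiers.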

static int binder_translate_handle(struct flat_binder_object *fp,

struct binder_transaction *t,

struct binder_thread *thread)

{

struct binder_proc *proc = thread->proc;

struct binder_proc *target_proc = t->to_proc;

struct binder_node *node;

struct binder_ref_data src_rdata;

int ret = 0;

//Find the binder_node that the handle refers to

node = binder_get_node_from_ref(proc, fp->handle,

fp->hdr.type == BINDER_TYPE_HANDLE, &src_rdata);

if (!node) {

binder_user_error("%d:%d got transaction with invalid handle, %d\n",

proc->pid, thread->pid, fp->handle);

return -EINVAL;

}

if (security_binder_transfer_binder(proc->tsk, target_proc->tsk)) {

ret = -EPERM;

goto done;

}

binder_node_lock(node);

if (node->proc == target_proc) {

//.....

} else { //this branch is taken

struct binder_ref_data dest_rdata;

binder_node_unlock(node);

//Create a new binder_ref struct and insert it into the refs_by_desc red-black tree of target_proc

//Compute the new desc value (the handle seen by user space) and store it in dest_rdata

ret = binder_inc_ref_for_node(target_proc, node,

fp->hdr.type == BINDER_TYPE_HANDLE,

NULL, &dest_rdata);

if (ret)

goto done;

fp->binder = 0;

fp->handle = dest_rdata.desc; //the new desc value is stored in the data and returned to user space

fp->cookie = 0;

trace_binder_transaction_ref_to_ref(t, node, &src_rdata,

&dest_rdata);

binder_debug(BINDER_DEBUG_TRANSACTION,

" ref %d desc %d -> ref %d desc %d (node %d)\n",

src_rdata.debug_id, src_rdata.desc,

dest_rdata.debug_id, dest_rdata.desc,

node->debug_id);

}

done:

binder_put_node(node);

return ret;

}

static struct binder_node *binder_get_node_from_ref(

struct binder_proc *proc,

u32 desc, bool need_strong_ref,

struct binder_ref_data *rdata)

{

struct binder_node *node;

struct binder_ref *ref;

binder_proc_lock(proc);

ref = binder_get_ref_olocked(proc, desc, need_strong_ref);

if (!ref)

goto err_no_ref;

node = ref->node;

/*

* Take an implicit reference on the node to ensure

* it stays alive until the call to binder_put_node()

*/

binder_inc_node_tmpref(node);

if (rdata)

*rdata = ref->data;

binder_proc_unlock(proc);

return node;

err_no_ref:

binder_proc_unlock(proc);

return NULL;

}

static struct binder_ref *binder_get_ref_olocked(struct binder_proc *proc,

u32 desc, bool need_strong_ref)

{

struct rb_node *n = proc->refs_by_desc.rb_node;

struct binder_ref *ref;

while (n) {

ref = rb_entry(n, struct binder_ref, rb_node_desc);

if (desc < ref->data.desc) {

n = n->rb_left;

} else if (desc > ref->data.desc) {

n = n->rb_right;

} else if (need_strong_ref && !ref->data.strong) {

binder_user_error("tried to use weak ref as strong ref\n");

return NULL;

} else {

return ref;

}

}

return NULL;

}

static int binder_inc_ref_for_node(struct binder_proc *proc,

struct binder_node *node,

bool strong,

struct list_head *target_list,

struct binder_ref_data *rdata)

{

struct binder_ref *ref;

struct binder_ref *new_ref = NULL;

int ret = 0;

binder_proc_lock(proc);

ref = binder_get_ref_for_node_olocked(proc, node, NULL);

if (!ref) {

binder_proc_unlock(proc);

new_ref = kzalloc(sizeof(*ref), GFP_KERNEL);

if (!new_ref)

return -ENOMEM;

binder_proc_lock(proc);

ref = binder_get_ref_for_node_olocked(proc, node, new_ref);

}

ret = binder_inc_ref_olocked(ref, strong, target_list);

*rdata = ref->data;

binder_proc_unlock(proc);

if (new_ref && ref != new_ref)

/*

* Another thread created the ref first so

* free the one we allocated

*/

kfree(new_ref);

return ret;

}

static struct binder_ref *binder_get_ref_for_node_olocked(

struct binder_proc *proc,

struct binder_node *node,

struct binder_ref *new_ref)

{

struct binder_context *context = proc->context;

struct rb_node **p = &proc->refs_by_node.rb_node;

struct rb_node *parent = NULL;

struct binder_ref *ref;

struct rb_node *n;

while (*p) {

parent = *p;

ref = rb_entry(parent, struct binder_ref, rb_node_node);

if (node < ref->node)

p = &(*p)->rb_left;

else if (node > ref->node)

p = &(*p)->rb_right;

else

return ref;

}

if (!new_ref)

return NULL;

binder_stats_created(BINDER_STAT_REF);

new_ref->data.debug_id = atomic_inc_return(&binder_last_id);

new_ref->proc = proc;

new_ref->node = node;

rb_link_node(&new_ref->rb_node_node, parent, p);

rb_insert_color(&new_ref->rb_node_node, &proc->refs_by_node);

new_ref->data.desc = (node == context->binder_context_mgr_node) ? 0 : 1;

for (n = rb_first(&proc->refs_by_desc); n != NULL; n = rb_next(n)) {

ref = rb_entry(n, struct binder_ref, rb_node_desc);

if (ref->data.desc > new_ref->data.desc)

break;

new_ref->data.desc = ref->data.desc + 1;

}

p = &proc->refs_by_desc.rb_node;

while (*p) {

parent = *p;

ref = rb_entry(parent, struct binder_ref, rb_node_desc);

if (new_ref->data.desc < ref->data.desc)

p = &(*p)->rb_left;

else if (new_ref->data.desc > ref->data.desc)

p = &(*p)->rb_right;

else

BUG();

}

rb_link_node(&new_ref->rb_node_desc, parent, p);

rb_insert_color(&new_ref->rb_node_desc, &proc->refs_by_desc);

binder_node_lock(node);

hlist_add_head(&new_ref->node_entry, &node->refs);

binder_debug(BINDER_DEBUG_INTERNAL_REFS,

"%d new ref %d desc %d for node %d\n",

proc->pid, new_ref->data.debug_id, new_ref->data.desc,

node->debug_id);

binder_node_unlock(node);

return new_ref;

}
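The desc computation in binder_get_ref_for_node_olocked picks the smallest descriptor that is not yet in use, starting at 1 for ordinary nodes and at 0 for the context manager node. Here is a minimal sketch of that loop, assuming the existing descriptors are supplied as a sorted array instead of being read from the refs_by_desc tree; next_desc is a hypothetical helper name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Picks the descriptor for a new reference.
 * used: descriptors already taken, sorted ascending without duplicates
 *       (the order an in-order walk of the tree would yield).
 * is_context_mgr: nonzero if the node is the context manager node. */
static uint32_t next_desc(const uint32_t *used, size_t n, int is_context_mgr)
{
    uint32_t desc = is_context_mgr ? 0 : 1;

    for (size_t i = 0; i < n; i++) {
        assert(i == 0 || used[i - 1] < used[i]);
        if (used[i] > desc)
            break;              /* gap found below used[i] */
        desc = used[i] + 1;     /* candidate is taken, try the next value */
    }
    return desc;
}
```

For example, with descriptors 0, 1, 2 and 4 in use the next reference gets 3, and a process with no references yet gets 1 for its first ordinary service.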

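binder_ioctl_write_read handles the write half of a request first and then the read half. The Client has been blocked in the read half ever since binder_call submitted its request, because that single ioctl both writes and reads: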

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

int ret = 0;

struct binder_proc *proc = filp->private_data;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

struct binder_write_read bwr;

//......

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

if (bwr.write_size > 0) {

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

//After the write, read the response; the caller blocks here

//A Client blocked here is woken up by ServiceManager's reply

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

binder_inner_proc_lock(proc);

if (!binder_worklist_empty_ilocked(&proc->todo))

binder_wakeup_proc_ilocked(proc);

binder_inner_proc_unlock(proc);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

out:

return ret;

}
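The write-then-read ordering explains why the same ioctl serves three different call styles in the sample. The sketch below is a toy model, not driver code: mock_bwr is a hypothetical cut-down version of struct binder_write_read, and mock_write_read only imitates the order in which the two halves run and how the consumed counters are filled in.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical cut-down version of struct binder_write_read. */
struct mock_bwr {
    size_t write_size, write_consumed;
    size_t read_size,  read_consumed;
};

/* One simulated BINDER_WRITE_READ call. The write half runs first and the
 * read half second; a half is skipped when its size is 0.
 * pending: number of bytes the "driver" has queued for this caller. */
static int mock_write_read(struct mock_bwr *bwr, size_t pending)
{
    assert(bwr != NULL);

    if (bwr->write_size > 0)
        bwr->write_consumed = bwr->write_size;   /* all commands accepted */

    if (bwr->read_size > 0) {
        /* The real driver would sleep here while nothing is pending. */
        size_t n = pending < bwr->read_size ? pending : bwr->read_size;
        bwr->read_consumed = n;
    }
    return 0;
}
```

In these terms binder_call sets both sizes, so it writes and then waits for the reply, while binder_loop sets write_size to 0 and only reads. A write-only call, with read_size set to 0, returns without waiting.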

The Client is then woken up:

binder_thread_read

-> binder_ioctl_write_read

-> binder_ioctl

-> ioctl (back in user space)

-> binder_call

int binder_call(struct binder_state *bs,

struct binder_io *msg, struct binder_io *reply,

uint32_t target, uint32_t code)

{

//......

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

//Woken up here

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder: ioctl failed (%s)\n", strerror(errno));

goto fail;

}

//Parse the received data

res = binder_parse(bs, reply, (uintptr_t) readbuf, bwr.read_consumed, 0);

if (res == 0) return 0;

if (res < 0) goto fail;

}

//......

}

The received data is parsed as follows:

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

int r = 1;

uintptr_t end = ptr + (uintptr_t) size;

while (ptr < end) {

uint32_t cmd = *(uint32_t *) ptr;

ptr += sizeof(uint32_t);

switch(cmd) {

//......

//Execution takes this branch

case BR_REPLY: {

struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;

if ((end - ptr) < sizeof(*txn)) {

ALOGE("parse: reply too small!\n");

return -1;

}

binder_dump_txn(txn);

if (bio) {

//Parse the data into bio, which is the reply passed in by the caller

bio_init_from_txn(bio, txn);

bio = 0;

} else {

/* todo FREE BUFFER */

}

ptr += sizeof(*txn);

r = 0;

break;

}

default:

ALOGE("parse: OOPS %d\n", cmd);

return -1;

}

}

return r;

}

Once reply is parsed, control returns to binder_call, which in turn returns to svcmgr_lookup:

uint32_t svcmgr_lookup(struct binder_state *bs, uint32_t target, const char *name)

{

uint32_t handle;

unsigned iodata[512/4];

struct binder_io msg, reply;

bio_init(&msg, iodata, sizeof(iodata), 4);

bio_put_uint32(&msg, 0); // strict mode header

bio_put_string16_x(&msg, SVC_MGR_NAME);

bio_put_string16_x(&msg, name);

//Returns here

//The handle has already been written into reply

if (binder_call(bs, &msg, &reply, target, SVC_MGR_CHECK_SERVICE))

return 0;

//Extract the handle from reply

handle = bio_get_ref(&reply);

if (handle)

binder_acquire(bs, handle);

//Tell the driver that the binder call is complete

binder_done(bs, &msg, &reply);

return handle;

}

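At this point the client holds the handle of the hello service, and the service lookup phase is complete.

The whole process is shown in the figure:

# 2. Using the service

Using the service means calling sayhello on the Client side: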

void sayhello(void)

{

unsigned iodata[512/4];

struct binder_io msg, reply;

/* Build the binder_io */

bio_init(&msg, iodata, sizeof(iodata), 4);

/* Put the arguments */

bio_put_uint32(&msg, 0); // strict mode header

bio_put_string16_x(&msg, "IHelloService");

/* Call binder_call */

if (binder_call(g_bs, &msg, &reply, g_handle, HELLO_SVR_CMD_SAYHELLO))

return ;

/* A return value would be parsed from reply here; sayhello only signals completion */

binder_done(g_bs, &msg, &reply);

}

int binder_call(struct binder_state *bs,

struct binder_io *msg, struct binder_io *reply,

uint32_t target, uint32_t code)

{

int res;

struct binder_write_read bwr;

struct {

uint32_t cmd;

struct binder_transaction_data txn;

} __attribute__((packed)) writebuf;

unsigned readbuf[32];

if (msg->flags & BIO_F_OVERFLOW) {

fprintf(stderr,"binder: txn buffer overflow\n");

goto fail;

}

writebuf.cmd = BC_TRANSACTION;

writebuf.txn.target.handle = target;

writebuf.txn.code = code;

writebuf.txn.flags = 0;

writebuf.txn.data_size = msg->data - msg->data0;

writebuf.txn.offsets_size = ((char*) msg->offs) - ((char*) msg->offs0);

writebuf.txn.data.ptr.buffer = (uintptr_t)msg->data0;

writebuf.txn.data.ptr.offsets = (uintptr_t)msg->offs0;

bwr.write_size = sizeof(writebuf);

bwr.write_consumed = 0;

bwr.write_buffer = (uintptr_t) &writebuf;

hexdump(msg->data0, msg->data - msg->data0);

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder: ioctl failed (%s)\n", strerror(errno));

goto fail;

}

res = binder_parse(bs, reply, (uintptr_t) readbuf, bwr.read_consumed, 0);

if (res == 0) {

return 0;

}

if (res < 0) {

goto fail;

}

}

fail:

memset(reply, 0, sizeof(*reply));

reply->flags |= BIO_F_IOERROR;

return -1;

}

With the binder_io data built, binder_call issues the remote call and the client traps into the kernel with the following call stack:

ioctl(bs->fd, BINDER_WRITE_READ, &bwr)

-> vfs

-> binder_ioctl

-> binder_ioctl_write_read

-> binder_thread_write

-> binder_transaction

The flow here is largely the same as in section 3 of Binder 驱动情景分析之服务注册过程; the main difference lies in binder addressing:

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply,

binder_size_t extra_buffers_size)

{

//......

if (reply) {

//......

} else {

if (tr->target.handle) { //handle is nonzero, so this branch is taken

struct binder_ref *ref;

/*

* There must already be a strong ref

* on this node. If so, do a strong

* increment on the node to ensure it

* stays alive until the transaction is

* done.

*/

binder_proc_lock(proc);

//Point of interest 1: use the handle to find the binder_ref struct in the red-black tree of binder_proc

ref = binder_get_ref_olocked(proc, tr->target.handle,

true);

if (ref) {

//Point of interest 2: use the binder_ref to find the binder_proc struct of the target process

target_node = binder_get_node_refs_for_txn(

ref->node, &target_proc,

&return_error);

} else {

binder_user_error("%d:%d got transaction to invalid handle\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

}

binder_proc_unlock(proc);

} else {

//......

}

}

//......

}

Let us first look at how point of interest 1 is implemented:

static struct binder_ref *binder_get_ref_olocked(struct binder_proc *proc,

u32 desc, bool need_strong_ref)

{

struct rb_node *n = proc->refs_by_desc.rb_node;

struct binder_ref *ref;

while (n) {

ref = rb_entry(n, struct binder_ref, rb_node_desc);

if (desc < ref->data.desc) {

n = n->rb_left;

} else if (desc > ref->data.desc) {

n = n->rb_right;

} else if (need_strong_ref && !ref->data.strong) {

binder_user_error("tried to use weak ref as strong ref\n");

return NULL;

} else {

return ref;

}

}

return NULL;

}

Here the handle is used to look up the matching binder_ref struct in the proc->refs_by_desc red-black tree.

Next, let us look at how point of interest 2 is implemented:

//Call site

//ref->node: the node pointer of the binder_ref gives the binder_node of the target service

target_node = binder_get_node_refs_for_txn(ref->node, &target_proc,&return_error);

//Implementation

static struct binder_node *binder_get_node_refs_for_txn(

struct binder_node *node,

struct binder_proc **procp,

uint32_t *error)

{

struct binder_node *target_node = NULL;

binder_node_inner_lock(node);

if (node->proc) {

target_node = node;

binder_inc_node_nilocked(node, 1, 0, NULL);

binder_inc_node_tmpref_ilocked(node);

node->proc->tmp_ref++;

//This single line is the key step

*procp = node->proc;

} else

*error = BR_DEAD_REPLY;

binder_node_inner_unlock(node);

return target_node;

}

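There is a lot of code, but the core flow takes just two steps: follow the node pointer of the binder_ref to the binder_node of the target service, then follow the proc pointer of that binder_node to the binder_proc struct of the target process.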
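The two pointer hops can be written down in a few lines once locking and reference counting are stripped away. The sketch below uses hypothetical, heavily reduced structures (mock_proc, mock_ref, mock_node) and a linear scan in place of the red-black tree lookup; it is meant only to show the addressing path.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, heavily reduced versions of the kernel structures. */
struct mock_proc;
struct mock_node { struct mock_proc *proc; };   /* owner; NULL once it died */
struct mock_ref  { uint32_t desc; struct mock_node *node; };
struct mock_proc { struct mock_ref *refs; size_t nrefs; };

/* Step 1: handle -> ref (linear scan here, tree lookup in the driver). */
static struct mock_ref *mock_get_ref(struct mock_proc *proc, uint32_t handle)
{
    for (size_t i = 0; i < proc->nrefs; i++)
        if (proc->refs[i].desc == handle)
            return &proc->refs[i];
    return NULL;
}

/* Step 2: ref -> node -> owning process. Returns NULL if the handle is
 * unknown to the caller or the service process is gone. */
static struct mock_proc *mock_resolve_target(struct mock_proc *caller,
                                             uint32_t handle)
{
    assert(caller != NULL);
    struct mock_ref *ref = mock_get_ref(caller, handle);
    if (!ref || !ref->node)
        return NULL;
    return ref->node->proc;
}
```

The two NULL cases correspond to the error paths in the driver code above: an invalid handle leads to BR_FAILED_REPLY, and a node without an owning process leads to BR_DEAD_REPLY.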

After binder_transaction finishes in the kernel, the Server wakes up in binder_loop and enters the callback test_server_handler:

binder_loop(bs, test_server_handler);

int test_server_handler(struct binder_state *bs,

struct binder_transaction_data_secctx *txn_secctx,

struct binder_io *msg,

struct binder_io *reply)

{

struct binder_transaction_data *txn = &txn_secctx->transaction_data;

int (*handler)(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply);

//txn->target.ptr is the hello_service_handler that was passed to svcmgr_publish

handler = (int (*)(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply))txn->target.ptr;

//Call hello_service_handler

return handler(bs, txn, msg, reply);

}

hello_service_handler is then called and, because code == HELLO_SVR_CMD_SAYHELLO, it invokes the sayhello function:

int hello_service_handler(struct binder_state *bs,

struct binder_transaction_data_secctx *txn_secctx,

struct binder_io *msg,

struct binder_io *reply)

{

struct binder_transaction_data *txn = &txn_secctx->transaction_data;

/* txn->code tells us which function to call

* Arguments, if any, are read from msg

* Results, if any, are written to reply

*/

/* sayhello

* sayhello_to

*/

uint16_t *s;

char name[512];

size_t len;

//uint32_t handle;

uint32_t strict_policy;

int i;

// Equivalent to Parcel::enforceInterface(), reading the RPC

// header with the strict mode policy mask and the interface name.

// Note that we ignore the strict_policy and don't propagate it

// further (since we do no outbound RPCs anyway).

strict_policy = bio_get_uint32(msg);

switch(txn->code) {

case HELLO_SVR_CMD_SAYHELLO:

//Call sayhello

sayhello();

//Write the return value 0

bio_put_uint32(reply, 0); /* no exception */

return 0;

//...... omitted

default:

fprintf(stderr, "unknown code %d\n", txn->code);

return -1;

}

return 0;

}

Control then returns to binder_parse, which calls binder_send_reply to send the data back to the client:

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

//......

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

bio_init(&reply, rdata, sizeof(rdata), 4);

bio_init_from_txn(&msg, &txn.transaction_data);

res = func(bs, &txn, &msg, &reply);

if (txn.transaction_data.flags & TF_ONE_WAY) {

binder_free_buffer(bs, txn.transaction_data.data.ptr.buffer);

} else {

//This path is taken; the data is sent back

binder_send_reply(bs, &reply, txn.transaction_data.data.ptr.buffer, res);

}

}

break;

//......

}

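The kernel-side flow of binder_send_reply matches section 5 of Binder 驱动情景分析之服务注册过程 and is not repeated here.

The client then receives the data and parses the return value, the Server enters its next loop iteration, and the remote procedure call is complete.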
